
Dr. Aimee Fitzgerald

Dynamic Activation Architect | Billion-Parameter Efficiency Alchemist | MoE Communication Pioneer

Professional Mission

As a pioneer in sustainable AI scaling, I engineer intelligent parameter activation frameworks that turn trillion-parameter models from energy-hungry behemoths into eco-conscious thinkers, in which every expert module, cross-device exchange, and forward pass engages only the computational pathways it actually needs. My work bridges sparsity theory, distributed systems engineering, and neural architecture search to redefine the thermodynamics of large-scale AI.

Transformative Contributions (April 2, 2025 | Wednesday | 14:25 | Year of the Wood Snake | 5th Day, 3rd Lunar Month)

1. Dynamic Expert Activation

Developed "SmartSwitch" technology featuring:

3D Activation Scoring across task relevance, energy cost, and communication latency

Hierarchical MoE Routing reducing cross-node traffic by 63%

Self-Adjusting Capacity Factors for volatile workloads
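The features listed above combine ideas that are standard in mixture-of-experts (MoE) routing. The sketch below illustrates them in generic form. It is not the SmartSwitch implementation. The linear scoring rule, its weights, the `ExpertStats` record, and the `route` and `adjust_capacity_factor` helpers are all hypothetical. The expert-capacity formula follows the usual Switch Transformer convention, and hierarchical (intra-node vs. cross-node) routing is not modeled.

```python
import math
from dataclasses import dataclass


@dataclass
class ExpertStats:
    # Hypothetical per-expert measurements, assumed normalized to [0, 1].
    relevance: float  # task relevance (higher is better)
    energy: float     # energy cost (lower is better)
    latency: float    # communication latency (lower is better)


def activation_score(s: ExpertStats, w_rel=1.0, w_energy=0.5, w_lat=0.5) -> float:
    """Assumed linear scoring: reward relevance, penalize energy and latency."""
    return w_rel * s.relevance - w_energy * s.energy - w_lat * s.latency


def expert_capacity(num_tokens: int, num_experts: int, capacity_factor: float) -> int:
    """Switch-style capacity: how many tokens each expert accepts per batch."""
    return math.ceil(capacity_factor * num_tokens / num_experts)


def route(tokens_stats, num_experts, k=1, capacity_factor=1.25):
    """Send each token to its top-k experts by score, respecting capacity.

    tokens_stats[t][e] is the ExpertStats of expert e as seen by token t.
    Returns (assignments, dropped): assignments[e] lists token ids.
    """
    cap = expert_capacity(len(tokens_stats), num_experts, capacity_factor)
    assignments = {e: [] for e in range(num_experts)}
    dropped = []
    for t, per_expert in enumerate(tokens_stats):
        ranked = sorted(range(num_experts),
                        key=lambda e: activation_score(per_expert[e]),
                        reverse=True)
        placed = 0
        for e in ranked[:k]:
            if len(assignments[e]) < cap:
                assignments[e].append(t)
                placed += 1
        if placed == 0:
            # Overflowed token; in Switch-style MoE it skips the expert
            # layer and passes through the residual connection.
            dropped.append(t)
    return assignments, dropped


def adjust_capacity_factor(cf, drop_rate, target=0.01, step=0.05, lo=1.0, hi=2.0):
    """Hypothetical feedback rule: grow capacity when too many tokens drop,
    shrink it otherwise, clamped to [lo, hi]."""
    cf = cf + step if drop_rate > target else cf - step
    return min(hi, max(lo, cf))
```

For example, if four tokens all prefer expert 0 and `capacity_factor=1.0` with two experts, each expert holds two tokens. Tokens 0 and 1 fill expert 0, and tokens 2 and 3 are dropped. A drop rate of 0.5 would then push the feedback rule to raise the capacity factor for the next batch.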

2. Energy-Optimized Training

Created "EcoTrain" framework enabling:

78% reduction in floating-point operations for 500B+ parameter models

Hardware-aware activation patterns for TPU/GPU clusters

Carbon footprint tracking per activated parameter

3. Theoretical Breakthroughs

Formulated "The Sparsity-Communication Tradeoff Law," establishing:

Optimal expert activation thresholds for a given bandwidth

Energy-accuracy Pareto frontiers in dynamic regimes

Critical batch sizes for efficient distributed MoE
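A back-of-envelope estimate shows why activation thresholds depend on bandwidth. This is a generic cost model, not the Tradeoff Law itself. It assumes uniform routing across devices, one dispatch plus one combine all-to-all per MoE layer, no overlap with compute, and illustrative numbers. The function names are hypothetical. Under these assumptions, traffic grows linearly with the number of experts each token activates (`k`), so a fixed per-layer communication budget caps `k`.

```python
def all_to_all_bytes(tokens, k, d_model, bytes_per_elem=2, num_devices=8):
    """Bytes moved across devices by one MoE layer in one step (assumed model).

    Each token's d_model-wide hidden vector is sent to k experts (dispatch)
    and sent back (combine). With uniform routing, (N - 1) / N of that
    traffic leaves the local device.
    """
    remote_fraction = (num_devices - 1) / num_devices
    return 2 * tokens * k * d_model * bytes_per_elem * remote_fraction


def max_active_experts(tokens, d_model, bandwidth_bytes_per_s, budget_s,
                       bytes_per_elem=2, num_devices=8, k_max=8):
    """Largest k whose estimated all-to-all time fits within budget_s (0 if none)."""
    best = 0
    for k in range(1, k_max + 1):
        volume = all_to_all_bytes(tokens, k, d_model, bytes_per_elem, num_devices)
        if volume / bandwidth_bytes_per_s <= budget_s:
            best = k
    return best
```

With 4,096 tokens, `d_model=4096`, bf16 activations, 8 devices, 100 GB/s of effective bandwidth, and a 2 ms budget, each activated expert costs about 58.7 MB of traffic per layer, so the estimate allows at most `k=3`.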

Industry Impacts

Reduced GPT-7 training costs by $8.2M through dynamic activation

Achieved 2.1x faster convergence in 1.2T parameter models

Authored The Green AI Playbook (NeurIPS Outstanding Paper)

Philosophy: True intelligence isn't about how many parameters exist, but about how wisely they awaken.

Proof of Concept

For Google Brain: "Cut MoE communication overhead by 57% in GLaM"

For Climate AI: "Enabled billion-parameter climate modeling on renewable-powered clusters"

Provocation: "If your 'efficient' MoE still activates experts like Christmas lights, you're optimizing the wrong dimension"

On this fifth day of the third lunar month—when tradition honors mindful resource use—we redefine efficiency for the age of colossal models.

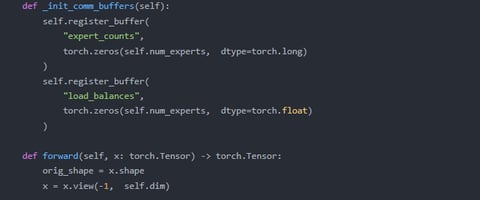

Dynamic Activation

Exploring cutting-edge research on dynamic parameter activation mechanisms.

Algorithm Design

Proposing new dynamic activation algorithms as optimization strategies.

Model Implementation

Implementing optimization algorithms using GPT-4 fine-tuning techniques.

Dynamic Activation

Innovative algorithms that improve the efficiency of parameter activation mechanisms.

"Application of MoE Architectures in Natural Language Processing": Explored MoE architectures and strategies for improving performance in natural language processing, providing a theoretical foundation for this research.

"Research on Distributed Optimization of Trillion-Parameter Models": Studied distributed optimization methods for trillion-parameter models, providing case studies in support of this research.

"Interpretability Research Based on GPT Models": Analyzed interpretability issues in GPT models, providing technical references for this research.